Implementation

The algorithm above is a general description of ambient occlusion, but it is not the implementation I chose. Instead, I used "Depth-Map Ambient Occlusion". I chose the depth-map approach because graphics hardware can compute the ambient terms much more quickly than the ray-tracing algorithm.

Depth-Map Based Ambient Occlusion

Depth-Map Ambient Occlusion approaches the problem from a different perspective. It renders the model from N viewpoints outside it, uses a depth map to determine which vertices are visible from each viewpoint, and then adds that viewpoint's visibility contribution to each visible vertex.
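Written as a formula, one plausible form of the per-vertex term is shown below. It assumes that viewpoint i lies in unit direction ω_i from the model, that V_i(v) is 1 when vertex v passes the depth-map test for view i and 0 otherwise, that n_v is the vertex normal, and that the cosine weights are normalized by their own sum. The normalization is an assumption; the text does not state one.

```latex
A(v) = \frac{\sum_{i=1}^{N} V_i(v)\,\max\left(0,\ \mathbf{n}_v \cdot \boldsymbol{\omega}_i\right)}
            {\sum_{i=1}^{N} \max\left(0,\ \mathbf{n}_v \cdot \boldsymbol{\omega}_i\right)}
```

With this normalization, A(v) = 1 for a vertex that is visible from every view facing it and A(v) = 0 for a vertex that is never visible.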

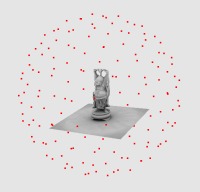

To generate the viewpoints for this implementation, I chose to use an icosahedron and subdivided it until it reached the desired number of vertices. This accounts for the unusual number of viewpoints used for each of the images above. The image below shows the viewpoints around the model.
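A minimal sketch of the viewpoint generation follows. It assumes the common midpoint scheme, in which every triangle is split into four and each new vertex is pushed out onto the unit sphere; the text does not say which subdivision rule was used. Under that scheme the vertex count can only take the values 10·4^k + 2 (12, 42, 162, 642 and so on), so a requested count is rounded up to the next level. The function name `icosphere_viewpoints` is hypothetical.

```python
import math


def _normalize(v):
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)


def icosphere_viewpoints(min_count):
    """Hypothetical helper: unit view directions from a subdivided icosahedron.

    Assumes 1-to-4 midpoint subdivision, repeated until at least `min_count`
    vertices exist, so the count steps through 12, 42, 162, 642, ...
    """
    t = (1.0 + math.sqrt(5.0)) / 2.0  # golden ratio
    verts = [_normalize(v) for v in [
        (-1, t, 0), (1, t, 0), (-1, -t, 0), (1, -t, 0),
        (0, -1, t), (0, 1, t), (0, -1, -t), (0, 1, -t),
        (t, 0, -1), (t, 0, 1), (-t, 0, -1), (-t, 0, 1)]]
    faces = [(0, 11, 5), (0, 5, 1), (0, 1, 7), (0, 7, 10), (0, 10, 11),
             (1, 5, 9), (5, 11, 4), (11, 10, 2), (10, 7, 6), (7, 1, 8),
             (3, 9, 4), (3, 4, 2), (3, 2, 6), (3, 6, 8), (3, 8, 9),
             (4, 9, 5), (2, 4, 11), (6, 2, 10), (8, 6, 7), (9, 8, 1)]

    while len(verts) < min_count:
        midpoint_cache = {}  # shared edges must reuse the same midpoint vertex

        def midpoint(a, b):
            key = (min(a, b), max(a, b))
            if key not in midpoint_cache:
                va, vb = verts[a], verts[b]
                # Sum of the endpoints, projected back onto the unit sphere.
                verts.append(_normalize((va[0] + vb[0], va[1] + vb[1], va[2] + vb[2])))
                midpoint_cache[key] = len(verts) - 1
            return midpoint_cache[key]

        new_faces = []
        for a, b, c in faces:
            ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
            new_faces += [(a, ab, ca), (b, bc, ab), (c, ca, bc), (ab, bc, ca)]
        faces = new_faces
    return verts
```

For example, `icosphere_viewpoints(100)` returns 162 unit directions; placing a camera at a fixed distance along each one, looking back at the model's center, gives an evenly spread set of viewpoints.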

From each of these viewpoints, the model was rendered into a depth map in graphics-board memory, and the depth map was then read back into system memory. Each vertex was projected into the depth map to determine its visibility. If the vertex was visible, its cosine weighting (the cosine of the angle between the vertex normal and the direction to the viewpoint) was computed and added to its ambient occlusion term.
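The per-view visibility step might look like the sketch below. It assumes orthographic views (the text does not say which projection was used), unit view directions `omega` pointing from the model toward the viewpoint, depth stored as `-p . omega` so that smaller values are nearer the viewpoint, and a small depth bias to avoid self-occlusion. `splat_depth_map` is a hypothetical CPU stand-in for the hardware render and readback: it records only vertex positions, not rasterized triangles. All function names are illustrative.

```python
import math


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _basis(omega):
    """Two unit vectors spanning the image plane perpendicular to omega."""
    helper = (0.0, 0.0, 1.0) if abs(omega[2]) < 0.9 else (1.0, 0.0, 0.0)
    right = _cross(helper, omega)
    length = math.sqrt(_dot(right, right))
    right = (right[0] / length, right[1] / length, right[2] / length)
    return right, _cross(omega, right)


def _project(p, omega, right, up, extent, res):
    """Assumed orthographic projection: pixel x, y and depth (-p . omega)."""
    x = int((_dot(p, right) + extent) / (2.0 * extent) * res)
    y = int((_dot(p, up) + extent) / (2.0 * extent) * res)
    return min(max(x, 0), res - 1), min(max(y, 0), res - 1), -_dot(p, omega)


def splat_depth_map(vertices, omega, extent, res):
    """Hypothetical stand-in for the GPU render: nearest depth per pixel."""
    right, up = _basis(omega)
    depth = [[math.inf] * res for _ in range(res)]
    for p in vertices:
        x, y, d = _project(p, omega, right, up, extent, res)
        depth[y][x] = min(depth[y][x], d)
    return depth


def accumulate_view(vertices, normals, omega, depth, extent, ao, weights, bias=1e-3):
    """Add one view's cosine-weighted visibility into the running per-vertex sums."""
    right, up = _basis(omega)
    res = len(depth)
    for i, (p, n) in enumerate(zip(vertices, normals)):
        cos_w = max(0.0, _dot(n, omega))  # cosine between normal and view direction
        weights[i] += cos_w
        x, y, d = _project(p, omega, right, up, extent, res)
        if d <= depth[y][x] + bias:  # depth test passed: vertex is visible here
            ao[i] += cos_w
```

After all views have been accumulated, `ao[i] / weights[i]` lies between 0 (never visible) and 1 (visible from every view facing the vertex); dividing by the summed weights is an assumed normalization, not one stated in the text.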

Once all the views have been processed, the model can be rendered with the ambient occlusion term as its color. This is not the final use of the term, of course; it should be used as part of the final lighting equation for the surface.
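As one example of using the term in a lighting equation, the sketch below assumes a single directional light with Lambertian diffuse shading and lets the ambient occlusion value scale only the ambient contribution, leaving direct light unshadowed. The function `shade` and its parameters are hypothetical.

```python
def shade(albedo, ao, ambient, normal, light_dir, light_color):
    """Hypothetical per-vertex shading in which AO scales only the ambient term.

    Assumes one directional light with Lambertian diffuse; light_dir is the
    unit vector from the surface toward the light. Colors are RGB tuples.
    """
    n_dot_l = max(0.0, sum(n * l for n, l in zip(normal, light_dir)))
    return tuple(a * (ao * amb + n_dot_l * lc)
                 for a, amb, lc in zip(albedo, ambient, light_color))
```

With `ao = 1` this reduces to ordinary ambient-plus-diffuse shading; as `ao` falls toward 0, creases and cavities lose their ambient light while directly lit areas keep their diffuse term.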

A bent normal can also be computed, which allows an environment map to be sampled for a rich, complex ambient effect. (A bent normal is simply a normal pointing in the direction from which the surface receives most of its ambient light.)
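A bent normal can be estimated from the same per-view depth tests by averaging the directions from which a vertex was visible. The sketch below assumes unit view directions pointing from the model toward each viewpoint and one visibility flag per view; weighting each direction by the cosine term is one choice, and an unweighted average of the visible directions would also be reasonable. The function `bent_normal` is hypothetical.

```python
import math


def bent_normal(normal, view_dirs, visible):
    """Hypothetical bent-normal estimate for one vertex.

    Averages the unit view directions from which the vertex passed the depth
    test, weighting each by max(0, n . omega); falls back to the surface
    normal when no facing view saw the vertex.
    """
    sx = sy = sz = 0.0
    for omega, seen in zip(view_dirs, visible):
        w = max(0.0, normal[0] * omega[0] + normal[1] * omega[1] + normal[2] * omega[2])
        if seen and w > 0.0:
            sx += w * omega[0]
            sy += w * omega[1]
            sz += w * omega[2]
    length = math.sqrt(sx * sx + sy * sy + sz * sz)
    if length == 0.0:
        return tuple(normal)
    return (sx / length, sy / length, sz / length)
```

A renderer could then look up the environment map along the bent normal instead of the surface normal, scaling the result by the ambient occlusion term.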

Whatever the final use, the model can be manipulated in real time with this level of lighting information.

References

- Landis, Hayden. "Production Ready Global Illumination." SIGGRAPH 2002.
- Whitehurst, Andrew. "Depth Map Ambient Occlusion."
