
The concept is not new, but the approach is slightly different from that of the other projects out there. The question to be answered by this project is: how close are we to mapping Renderman geometry and shaders to OpenGL and Cg? Most of the work will focus on mapping the Renderman shaders to Cg vertex/fragment programs. Mapping the Renderman geometry is not as much of an issue, but we may find that our Cg mapping affects how we want to represent our geometry when rendering. (For example, it might make sense to subdivide our geometry if it helps in mapping the shader to a Cg shader.)

At a global level, the idea would take the form of a program that could read the RIB file and shaders, and perform interactive viewing. The idea is not to output OpenGL code and fragment programs, but to provide a runtime environment. This can be seen in the following diagram:

Previous Work

Many people have done, or are doing, work on these types of concepts. Peercy & Olano did work on deconstructing Renderman shaders into small operations which they could then render using OpenGL; in their terminology, "OpenGL as an Assembly Language." Proudfoot, and the rest of the crew at Stanford, have done a great job of building a system which is capable of mapping shaders well to different levels of hardware capability. My interest in doing this project relates to seeing how much we can do with Cg and the current state of programmable hardware, i.e., how close are we to being able to easily map Renderman shaders to interactive hardware display?

Recognized Limitations

Right out of the gate, there are some recognized limitations in this project. Renderman offers quite a large set of capabilities that we cannot hope to have supported in Cg quite yet. No Cg profiles offer full support for looping. (These limitations were in place when this project was being worked on, in early 2003.) This is actually a limitation of the current generation of hardware, which we can probably expect to change in the coming year or so. Advanced filtering isn't supported in the language or the texture sampling units, but we may be able to get around this by writing our own samplers in the fragment programs. (We shall see.)

Leveraging our Architecture

As seen in the diagram above, the idea is that we will build our own proprietary scene graph, which will be traversed using internal software. This allows us to represent the geometry and the shaders in whatever manner makes them efficient to render. Obviously, this could take the form of multiple passes with different shaders, but that is not my goal. Instead, I am considering that we can use textures as look-ups for exotic functions and/or pre-compute aspects of shaders which may be constant. Therefore the scene graph may contain references to textures which never appear in any of the original Renderman shaders. We can also use multiple permutations of Cg shaders to do a job. In a recent unrelated project, I found that switching between fragment shaders for slightly different permutations was a great performance improvement over having one shader do it all. With this project, performance isn't the only concern; there is also the question of what can be done in a fragment shader, and what can be done within the 1024 instructions to which fragment programs are currently limited.
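To make the texture look-up idea concrete, here is a minimal CPU-side sketch in Python (not Cg, and not code from this project). It bakes an expensive function into a small table, the way one might fill a 1D texture, and then samples it with linear interpolation, which is roughly what the texture unit's filtering would do. The function names, the table size of 256, and the choice of a power function as the "exotic" function are all assumptions made for illustration.

```python
def bake_lut(fn, size, lo, hi):
    """Sample fn at `size` evenly spaced points on [lo, hi], like filling a 1D texture."""
    step = (hi - lo) / (size - 1)
    return [fn(lo + i * step) for i in range(size)]


def sample_lut(lut, x, lo, hi):
    """Look up x with clamping and linear interpolation between neighboring texels."""
    t = (x - lo) / (hi - lo)
    t = min(max(t, 0.0), 1.0)            # clamp-to-edge addressing
    pos = t * (len(lut) - 1)
    i = min(int(pos), len(lut) - 2)      # left texel index
    frac = pos - i
    return lut[i] * (1.0 - frac) + lut[i + 1] * frac


# Hypothetical "exotic" function: a specular lobe x**32 baked once up front.
SHININESS = 32.0
specular_lut = bake_lut(lambda x: x ** SHININESS, 256, 0.0, 1.0)

if __name__ == "__main__":
    for x in (0.5, 0.9, 0.99):
        print(x, sample_lut(specular_lut, x, 0.0, 1.0), x ** SHININESS)
```

The trade-off is the usual one: the table costs a texture fetch instead of several arithmetic instructions, at the price of some interpolation error where the function curves sharply.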

Accuracy

As part of the project, we will gauge how close we can get to the correct Renderman output. There will most likely be times when the decision is to get "close enough." We have to remember that this project is pretty much a "preview" of Renderman output.

Approach

The approach to this project is to bite off small pieces and investigate them. Initially some lighting model and material investigations will be done. Then some more advanced shaders will be examined. All of the early investigations will be done manually, and then, if the concept proves itself, the scene graph and converter will be built to do the automatic work.

Differences in Shaders

Renderman (surface) shaders operate pretty much on a per-pixel level, whereas Cg provides vertex and fragment programs. The difference is that, for real-time work, many applications will use a vertex program to compute lighting on a per-vertex basis, and then allow the hardware to interpolate between the colors of the vertices. Obviously, per-vertex lighting is not sufficient for this project, unless we take the basic REYES approach of busting our geometry into micro-polygons. (At some point we may need to subdivide our geometry, but at the start that is not the goal.) To do per-pixel lighting, we will need to pass the necessary information (mostly normals) down from the vertex program to the fragment program. There we will do our work to emulate the Renderman shaders. If the information we want to pass from the vertex program to the fragment program varies across the surface, we will need to stuff it in the texture coordinate registers, so the hardware will perform the necessary interpolations.
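The difference between the two approaches can be sketched on the CPU. The Python below (an illustration, not the project's Cg code) shades a point halfway along an edge in two ways: by interpolating the specular values computed at the two vertices, and by interpolating the normals and evaluating the specular term at the point itself. The vertex normals, the shininess of 64, and the head-on light and viewer are assumptions chosen so that the highlight falls between the vertices.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lerp(a, b, t):
    return tuple(x * (1.0 - t) + y * t for x, y in zip(a, b))


def specular(n, l, v, shininess):
    """Blinn-Phong highlight using the half vector between light and view directions."""
    h = normalize(tuple(x + y for x, y in zip(l, v)))
    return max(0.0, dot(n, h)) ** shininess


LIGHT = (0.0, 0.0, 1.0)   # assumed: light straight on
VIEW = (0.0, 0.0, 1.0)    # assumed: viewer straight on
SHININESS = 64.0
n0 = normalize((-0.5, 0.0, 1.0))   # normal at the first vertex
n1 = normalize((0.5, 0.0, 1.0))    # normal at the second vertex


def per_vertex(t):
    """Light at the vertices, then interpolate the resulting values."""
    c0 = specular(n0, LIGHT, VIEW, SHININESS)
    c1 = specular(n1, LIGHT, VIEW, SHININESS)
    return c0 * (1.0 - t) + c1 * t


def per_pixel(t):
    """Interpolate the normal (as the texture coordinate registers would), then light."""
    n = normalize(lerp(n0, n1, t))
    return specular(n, LIGHT, VIEW, SHININESS)


if __name__ == "__main__":
    print("per-vertex at midpoint:", per_vertex(0.5))
    print("per-pixel  at midpoint:", per_pixel(0.5))
```

With these inputs the per-vertex version misses the highlight almost entirely, while the per-pixel version finds it in the middle of the edge. Note that the interpolated normal has to be renormalized in the per-pixel path.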

Phase 1: The first of many.

For phase 1, the goal is to put together Cg versions of some of the basic lighting models and material shaders. This will mean putting together the basic structure of what the Cg vertex and fragment programs will look like, and then implementing a few key shaders. (Plastic, anisotropic lighting, etc.)

First to be ported was the famous plastic.sl:

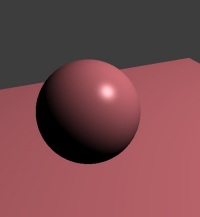

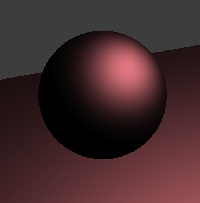

Views shown: shaded and wireframe.

The shader consists of a very simple vertex shader which just passes the vertex position and normals down to the fragment program. The fragment program looks very similar to the Renderman shader. Cg functions were written to look like the Renderman ambient, diffuse, and specular functions. (Blinn-Phong specular is used.) Lighting and material parameters are passed down as constant parameters. What did we learn from this first shader?

- It was not too difficult to port this simple shader and get good results.

- Support for many lights may be difficult.

- The global variables which are available anywhere in Renderman shaders need to be computed in the Cg shaders. Examples: I, H, etc. Some of these force us to make sure certain information is sent down to the shader.

- We may end up with a library of code which supports a number of the functions in the shading language (specular, etc.) and which would just be auto-inserted as shaders are converted.

- We may end up with a framework for fragment programs and the parameters they need.
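As a rough illustration of what the fragment-level work for plastic amounts to, here is a Python sketch (not the Cg code from this project) for a single point light. It follows the shape of the standard plastic.sl: ambient plus diffuse scaled by the surface color, plus a Blinn-Phong specular term. The helper names, the single-light restriction, and the use of 1/roughness as the specular exponent are assumptions made for illustration. Note how I and the half vector have to be computed explicitly from values passed in, which is the point made in the list above.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def mul(a, b):
    return tuple(x * y for x, y in zip(a, b))   # component-wise, for colors


def scale(v, s):
    return tuple(c * s for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    return scale(v, 1.0 / math.sqrt(dot(v, v)))


def faceforward(n, i):
    """Flip n if needed so that it opposes the incident direction i."""
    return n if dot(n, i) < 0.0 else scale(n, -1.0)


def diffuse(n, l, light_color):
    return scale(light_color, max(0.0, dot(n, l)))


def specular(n, v, l, light_color, roughness):
    h = normalize(add(l, v))                     # half vector, computed by hand
    return scale(light_color, max(0.0, dot(n, h)) ** (1.0 / roughness))


def plastic(P, N, eye, light_pos, light_color, ambient_color, Cs,
            Ka=1.0, Kd=0.5, Ks=0.5, roughness=0.1, specularcolor=(1.0, 1.0, 1.0)):
    I = normalize(sub(P, eye))                   # incident vector, eye to surface
    Nf = faceforward(normalize(N), I)
    V = scale(I, -1.0)
    L = normalize(sub(light_pos, P))
    base = add(scale(ambient_color, Ka), scale(diffuse(Nf, L, light_color), Kd))
    spec = scale(specular(Nf, V, L, light_color, roughness), Ks)
    return add(mul(Cs, base), mul(specularcolor, spec))


if __name__ == "__main__":
    color = plastic(P=(0.0, 0.0, 0.0), N=(0.0, 0.0, 1.0), eye=(0.0, 0.0, 5.0),
                    light_pos=(0.0, 0.0, 5.0), light_color=(1.0, 1.0, 1.0),
                    ambient_color=(0.0, 0.0, 0.0), Cs=(1.0, 0.0, 0.0))
    print(color)
```

Supporting many lights would mean repeating the diffuse and specular work per light, which is where the lack of looping and the instruction limit start to matter.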

A couple more ported shaders:


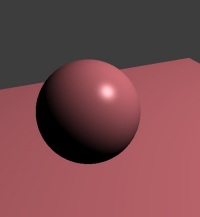

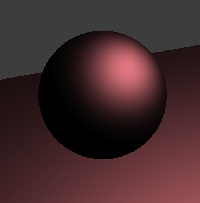

Plastic Shader with Glossy Specular

The image to the left is the Plastic Shader with the LocIllumGlossy specular computation from the Advanced Renderman book. It does a good job of illustrating that the fragment program is doing per-pixel lighting rather than per-vertex lighting. The sharpness is set quite high to help illustrate this.


Shiny Metal Shader

The image to the left is of the Shiny Metal shader from the Advanced Renderman book (minus environment sampling). It is a simple permutation of the basic plastic shader. To the right is the NVIDIA Alien rendered using the shiny metal shader.


Gooch Non-Photorealistic Technical Illustration Shader

This is a port of Mike King's shader for Gooch, from Renderman.org. (See Gooch et al. in the references for more on this shader and its uses.)
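For readers unfamiliar with the technique, the core of Gooch shading is a blend between a cool and a warm tone driven by the angle between the normal and the light. The Python sketch below shows that blend only; it is not Mike King's shader or the Cg port. The parameter names and default values (blue and yellow of 0.4, alpha of 0.2, beta of 0.6) and the convention that surfaces facing the light go warm are assumptions made for illustration.

```python
def gooch(n_dot_l, kd, blue=0.4, yellow=0.4, alpha=0.2, beta=0.6):
    """Cool-to-warm tone for a surface with diffuse color kd and given N.L."""
    # Cool tone: a blue shifted slightly toward the object color.
    k_cool = tuple(c + alpha * d for c, d in zip((0.0, 0.0, blue), kd))
    # Warm tone: a yellow shifted more strongly toward the object color.
    k_warm = tuple(c + beta * d for c, d in zip((yellow, yellow, 0.0), kd))
    # Remap N.L from [-1, 1] to [0, 1] so the whole sphere of normals is used.
    t = (1.0 + n_dot_l) / 2.0
    return tuple(t * w + (1.0 - t) * c for w, c in zip(k_warm, k_cool))


if __name__ == "__main__":
    red = (1.0, 0.0, 0.0)
    for n_dot_l in (-1.0, 0.0, 1.0):
        print(n_dot_l, gooch(n_dot_l, red))
```

Because the blend never goes to black, edges and silhouettes stay readable, which is the point of the technical illustration style.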


Pixar's Beachball Shader

This is the procedural shader from Marc Olano's Multi-Pass Renderman paper. It is shading the NVIDIA shader ball, hence the NVIDIA logo in it (and the odd shape). This shader is 100% procedural; there are no texture maps, so it shows a bit more use of a fragment program, including trig functions, step functions, and linear interpolations. It is still a relatively simple shader. My wife, whom I introduced to the Pixar shorts, pointed out that the colors were wrong, so the picture to the right is the ball with colors more aligned with the ball in Luxo Jr.


Anisotropic Lighting (Ward's) Shader:

This shader was a direct port of Larry Gritz's shader which implemented Greg Ward's Anisotropic Lighting.
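For reference, the specular term of Ward's anisotropic model, in the form it is commonly written, can be sketched in Python as below. This is not Larry Gritz's shader or the Cg port, just the formula evaluated on the CPU. The function and parameter names are assumptions, and all input vectors are assumed to be unit length, with x_dir and y_dir forming a tangent frame on the surface.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def ward_specular(n, l, v, x_dir, y_dir, alpha_x, alpha_y):
    """Ward's anisotropic specular term with roughness alpha_x, alpha_y along x_dir, y_dir."""
    n_dot_l = dot(n, l)
    n_dot_v = dot(n, v)
    if n_dot_l <= 0.0 or n_dot_v <= 0.0:
        return 0.0   # light or viewer is below the surface
    h = normalize(tuple(a + b for a, b in zip(l, v)))
    hx = dot(h, x_dir) / alpha_x
    hy = dot(h, y_dir) / alpha_y
    exponent = -2.0 * (hx * hx + hy * hy) / (1.0 + dot(h, n))
    norm = 4.0 * math.pi * alpha_x * alpha_y * math.sqrt(n_dot_l * n_dot_v)
    return math.exp(exponent) / norm


if __name__ == "__main__":
    N, X, Y = (0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)
    # Same tilt of the light, once along the smooth axis and once along the rough axis.
    print(ward_specular(N, (0.6, 0.0, 0.8), N, X, Y, 0.1, 0.5))
    print(ward_specular(N, (0.0, 0.6, 0.8), N, X, Y, 0.1, 0.5))
```

The two printed values differ even though the light is tilted by the same angle in both cases, which is what gives brushed-metal style highlights their stretched look.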


Update 2004:

This project hasn't seen any work since early 2003, so we can probably consider it closed out. The first phase showed how much was possible, and exposed a few of the limitations at the time. We now have hardware that can do looping and branching in pixel shaders. HLSL and the OpenGL Shading Language appear to be displacing Cg. We also have increased precision in the pixel shaders and frame buffers.
